Lunar NeRFs

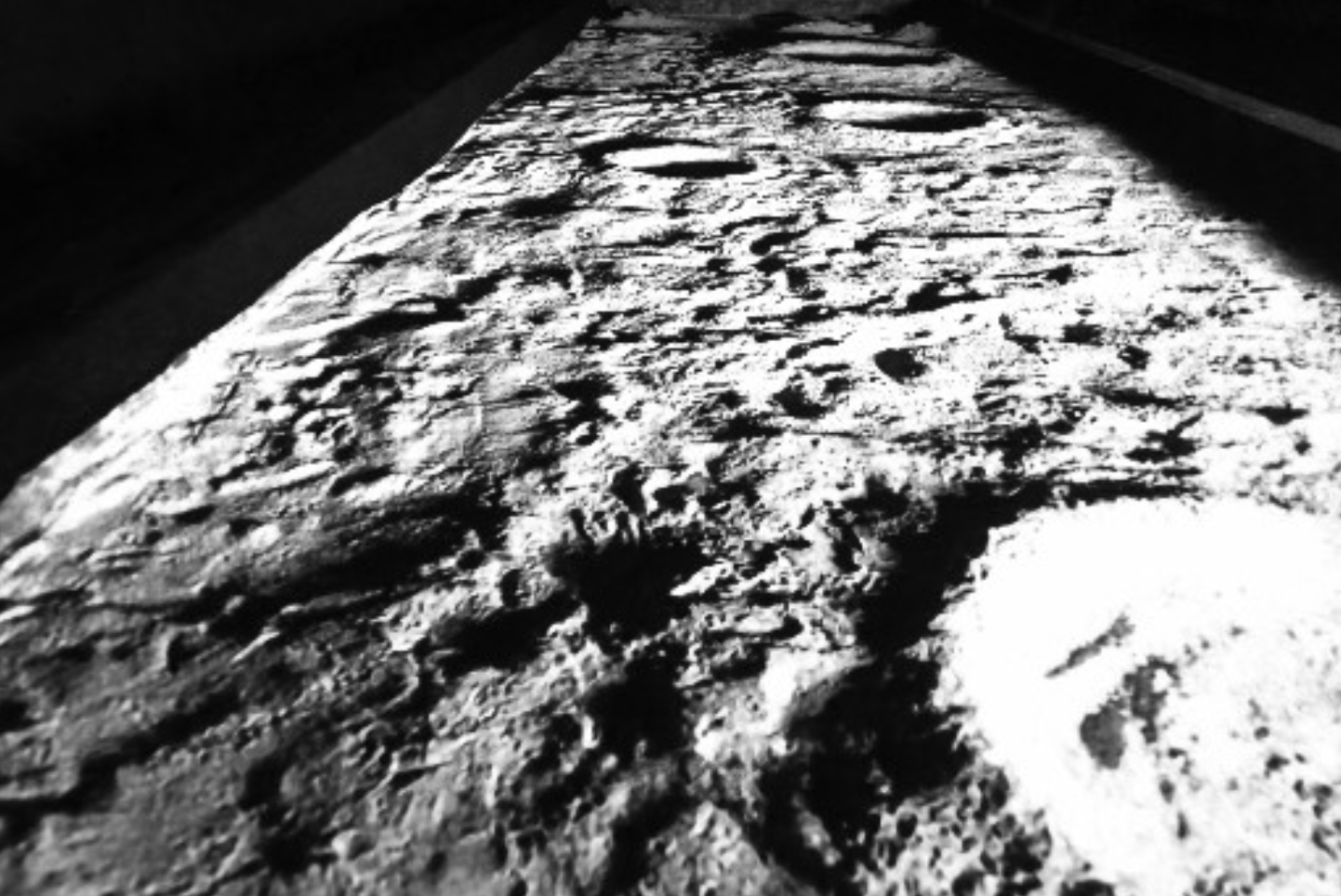

Neural radiance fields (NeRFs) are learned neural representations of a scene's density and color fields that can be used to render novel views via volumetric rendering. These techniques are an active research area in the graphics community, but their applicability to mobile robotics remains an open question. Some algorithms capable of learning a scene online have been proposed (see iMAP and NeRF-SLAM for examples), but these are very new methods that model room-scale scenes and require high-end GPUs. Planetary robotics is challenging for such techniques because of the often extreme lighting conditions, the lack of features in a scene, and the limited motion of the image sensors, in addition to the problem of limited onboard compute. This project seeks to assess the practicality of using NeRFs for modeling lunar terrain, with a focus on geometric as well as photometric aspects.
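To make the volumetric rendering step concrete, the following is a minimal NumPy sketch of the standard alpha-compositing quadrature used to turn sampled density and color along one camera ray into a pixel color and an expected depth. It is an illustration only, not the implementation used in the paper: the function name `render_ray`, its arguments, and the toy values are all assumptions, and a real NeRF would query a trained network for the densities and colors.

```python
import numpy as np

def render_ray(sigma, rgb, t):
    """Composite samples along a single ray (hypothetical helper).

    sigma: (N,) non-negative densities, one per ray segment
    rgb:   (N, 3) colors, one per ray segment
    t:     (N + 1,) increasing distances marking the segment edges
    """
    delta = np.diff(t)                         # segment lengths, shape (N,)
    alpha = 1.0 - np.exp(-sigma * delta)       # opacity of each segment
    # Transmittance: fraction of light that reaches each segment unblocked.
    trans = np.concatenate(([1.0], np.cumprod(1.0 - alpha)[:-1]))
    weights = trans * alpha                    # contribution of each segment
    color = weights @ rgb                      # rendered pixel color
    mid = 0.5 * (t[:-1] + t[1:])               # segment midpoints
    depth = weights @ mid                      # expected depth (not normalized)
    return color, depth, weights

# Toy ray (assumed values): empty space, then one opaque segment between 2 and 3.
t = np.linspace(0.0, 4.0, 5)
sigma = np.array([0.0, 0.0, 1e3, 0.0])
rgb = np.array([[1.0, 0.0, 0.0],
                [0.0, 1.0, 0.0],
                [0.2, 0.4, 0.6],
                [0.0, 0.0, 1.0]])
color, depth, weights = render_ray(sigma, rgb, t)
```

The same weights produce both the color and the depth estimate, which is why a field trained only on images can also be evaluated as a geometric model. The depth here is left unnormalized; dividing by the sum of the weights is a common variant when a ray may not hit a surface.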

Paper: M. Hansen, C. Adams, T. Fong, and D. Wettergreen, “Analyzing the Effectiveness of Neural Radiance Fields for Geometric Modeling of Lunar Terrain,” accepted at IEEE Aerospace Conference, Big Sky, MT, USA, 2024.

Dataset: This work uses data published in the POLAR Traverse Dataset.